📝 27 Oct 2024

Refurbished Ubuntu PCs have become quite affordable ($370 pic above). Can we turn them into a (Low-Cost) Build Farm for Apache NuttX RTOS?

In this article we…

Compile NuttX for a group of Arm32 Boards

Then scale up and compile NuttX for All Arm32 Boards

Thanks to the Docker Image provided by NuttX

Why not do all this in GitHub Actions? It’s free ain’t it?

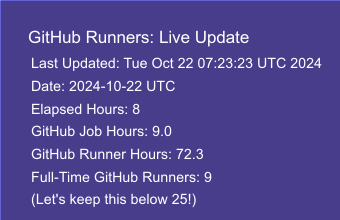

GitHub Actions taught us a Painful Lesson: Freebies Won’t Last Forever!

It’s probably a bad idea to be locked-in and over-dependent on a Single Provider for Continuous Integration. That’s why we’re exploring alternatives…

“[URGENT] Reducing our usage of GitHub Runners”

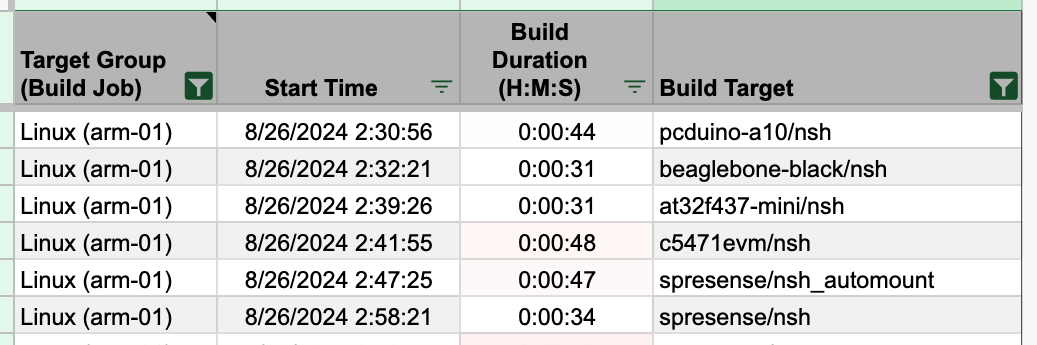

We’re creating a Build Farm that will compile All Boards in All Configurations (pic above)

To do that, we count every single thing that we’re compiling: Targets and Target Groups.

What’s a Target Group?

## Select the NuttX Target and compile it

tools/configure.sh rv-virt:nsh

make

Remember this configure.sh thingy? Let’s call rv-virt:nsh a NuttX Target. Thanks to the awesome NuttX Contributors, we have created 1,594 NuttX Targets.

To compile all 1,594 Targets, we lump them into 30 Target Groups (so they’re easier to track)

Looks familiar? Yep we see these when we Submit a Pull Request.

What’s inside the Target Groups?

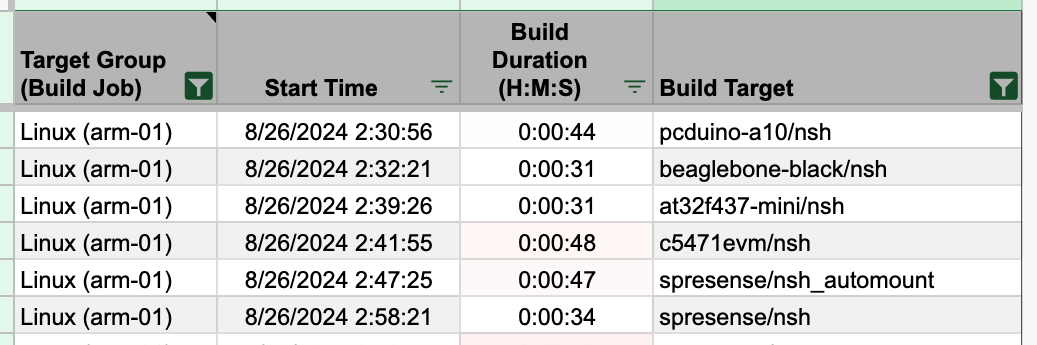

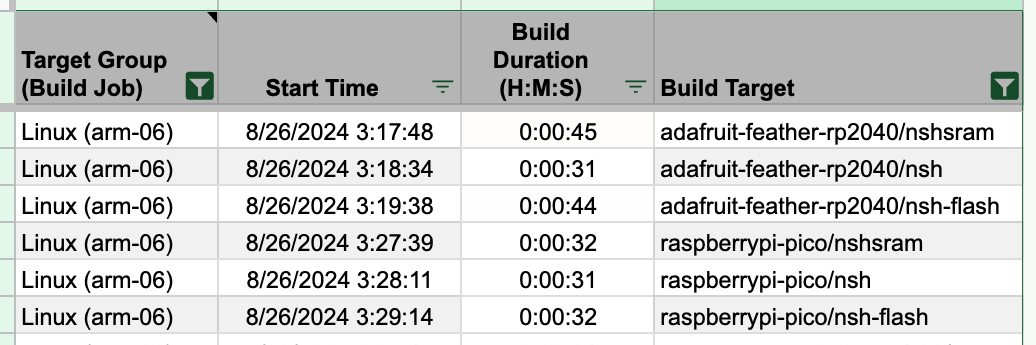

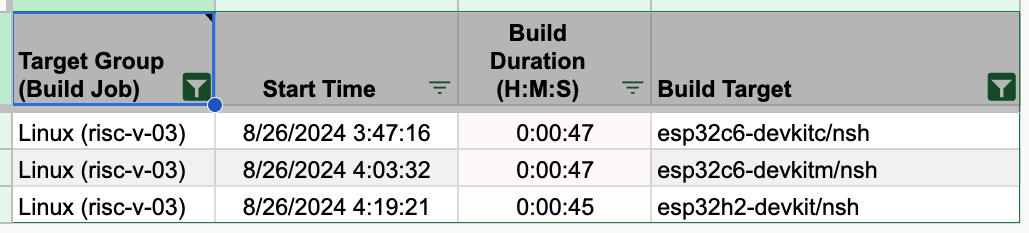

arm-01 has BeagleBone Black and Sony Spresense…

arm-06 has RP2040 Boards…

risc-v-03 has ESP32-C6 and ESP32-H2 Boards…

How are Target Groups defined?

Every NuttX Target has its own defconfig…

$ cd nuttx ; find . -name defconfig

./boards/arm/am335x/beaglebone-black/configs/nsh/defconfig

./boards/arm/cxd56xx/spresense/configs/usbmsc/defconfig

./boards/arm/cxd56xx/spresense/configs/lte/defconfig

./boards/arm/cxd56xx/spresense/configs/wifi/defconfig

...

Thus NuttX uses a Wildcard Pattern to select the defconfig (which becomes a NuttX Target): tools/ci/testlist/arm-05.dat

## Target Group arm-05 contains:

## boards/arm/[m-q]*/*/configs/*/defconfig

/arm/[m-q]*,CONFIG_ARM_TOOLCHAIN_GNU_EABI

## Compile the above Targets

## with `make` and GCC Toolchain

## Except for these:

## Compile with CMake instead

CMake,arduino-nano-33ble:nsh

## Exclude this Target from the build

-moxa:nsh

We’re ready to build the Target Groups…
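To recap the format above, here's a small sketch of how a defconfig path maps to a NuttX Target name, and how the three kinds of lines in arm-05.dat could be told apart. The helper names `defconfig_to_target` and `classify_testlist_line` are hypothetical (they're not part of NuttX), and the classification assumes only the line kinds shown above.

```shell
# Sketch only: these helpers are hypothetical, not part of NuttX.

# Convert a defconfig path into a NuttX Target name (board:config).
# Example path: ./boards/arm/cxd56xx/spresense/configs/usbmsc/defconfig
defconfig_to_target() {
  local path=$1
  local config_dir board_dir
  config_dir=$(dirname "$path")                    # .../spresense/configs/usbmsc
  board_dir=$(dirname "$(dirname "$config_dir")")  # .../spresense
  echo "$(basename "$board_dir"):$(basename "$config_dir")"
}

# Classify one line of a testlist .dat file, based on the
# three kinds of lines shown in arm-05.dat.
classify_testlist_line() {
  local line=$1
  case "$line" in
    /*)      echo "pattern" ;;  # Wildcard Pattern, optionally with a toolchain option
    CMake,*) echo "cmake"   ;;  # Compile this Target with CMake
    -*)      echo "exclude" ;;  # Exclude this Target from the build
    *)       echo "other"   ;;  # Anything else
  esac
}

defconfig_to_target ./boards/arm/cxd56xx/spresense/configs/usbmsc/defconfig
classify_testlist_line '/arm/[m-q]*,CONFIG_ARM_TOOLCHAIN_GNU_EABI'
classify_testlist_line 'CMake,arduino-nano-33ble:nsh'
classify_testlist_line '-moxa:nsh'
```

The first call prints the Target in the same board:config form that we pass to tools/configure.sh.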

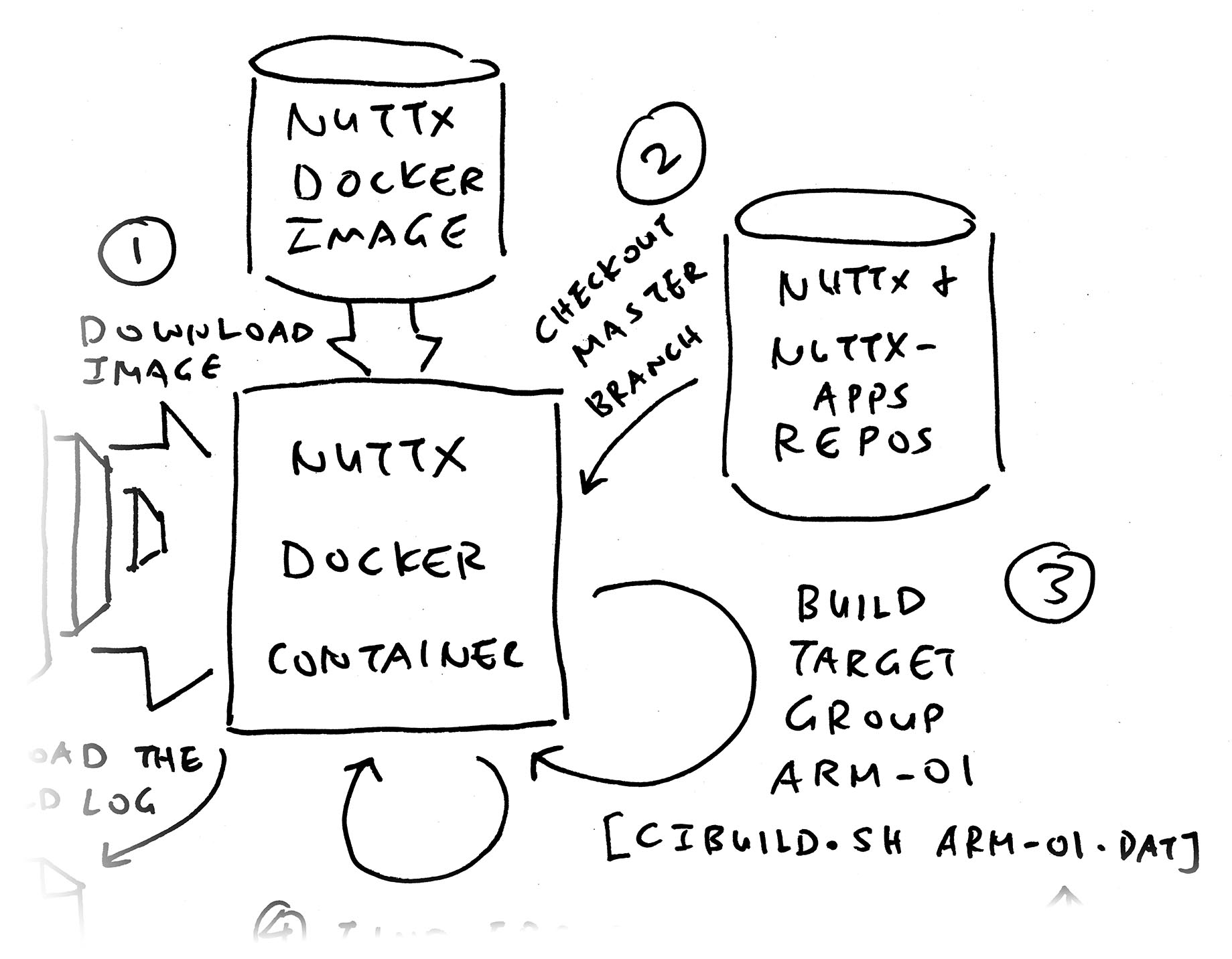

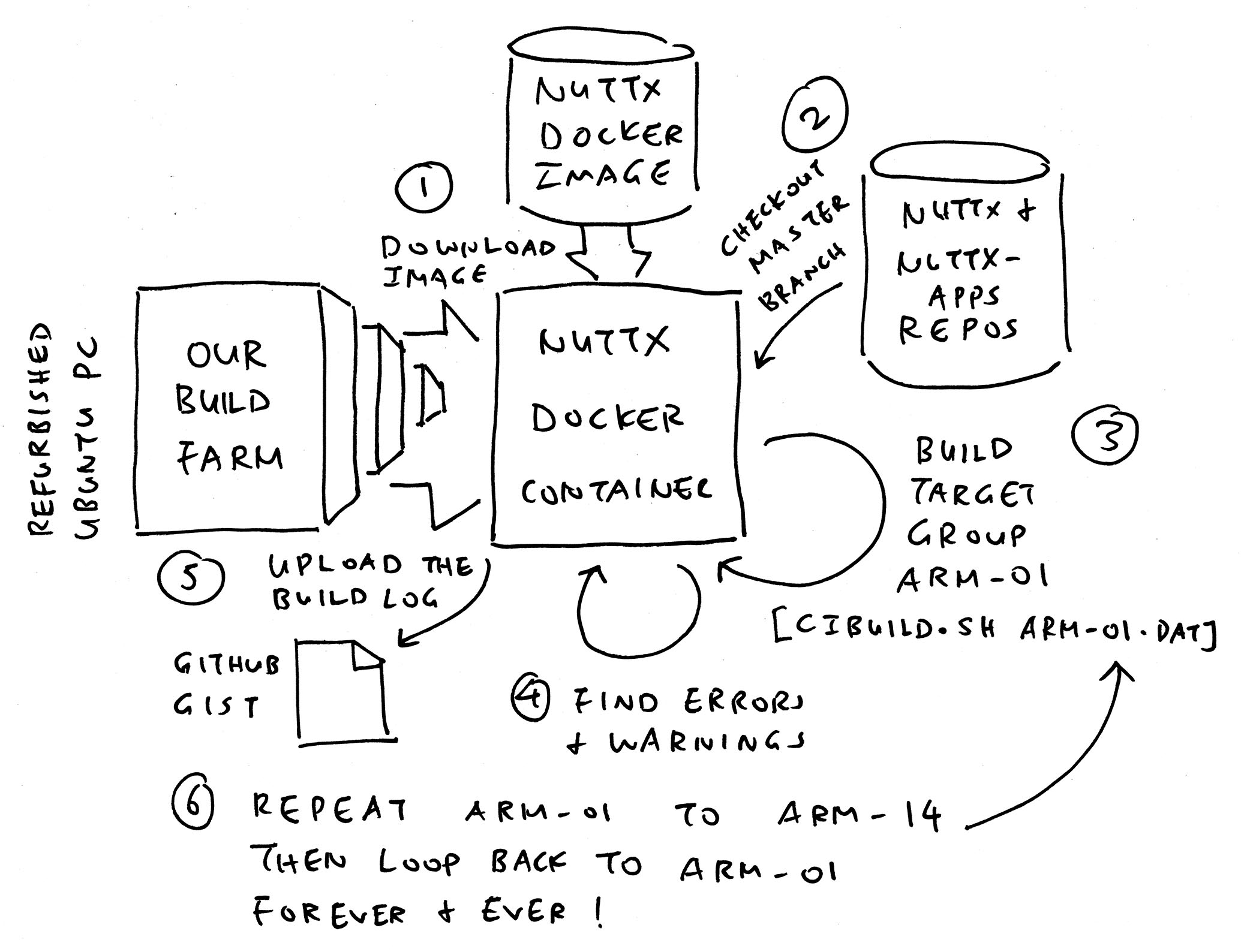

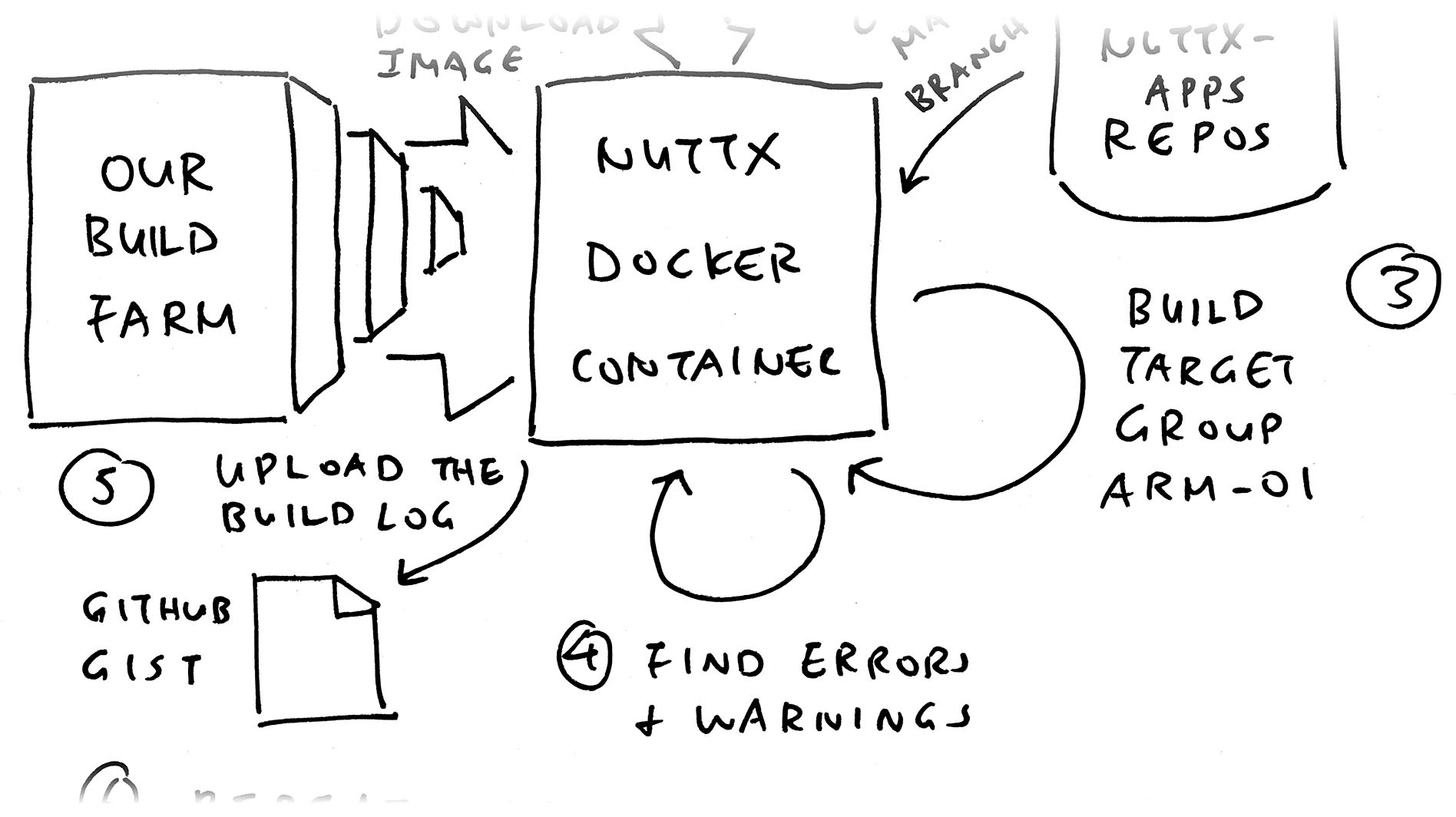

Suppose we wish to compile the NuttX Targets inside Target Group arm-01…

Here are the steps for Ubuntu x64…

Install Docker Engine

Download the Docker Image for NuttX

sudo docker pull \

ghcr.io/apache/nuttx/apache-nuttx-ci-linux:latest

Start the Docker Container

sudo docker run -it \

ghcr.io/apache/nuttx/apache-nuttx-ci-linux:latest \

/bin/bash -c "..."

Check out the master branch of nuttx repo

git clone \

https://github.com/apache/nuttx

Do the same for nuttx-apps repo

git clone \

https://github.com/apache/nuttx-apps \

apps

Inside the Docker Container: Build the Targets for arm-01

cd nuttx/tools/ci

./cibuild.sh \

-c -A -N -R \

testlist/arm-01.dat

Wait for arm-01 to complete, then clean up

(About 1.5 hours. That’s 15 mins slower than GitHub Actions)

## Optional: Free up the Docker disk space

## Warning: Will delete all Docker Containers currently NOT running!

sudo docker system prune --force

Put everything together: run-job.sh

## Build a NuttX Target Group with Docker

## Parameter is the Target Group, like "arm-01"

job=$1

## TODO: Install Docker Engine

## https://docs.docker.com/engine/install/ubuntu/

## TODO: For WSL, we may need to install Docker on Native Windows

## https://github.com/apache/nuttx/issues/14601#issuecomment-2453595402

## Download the Docker Image for NuttX

sudo docker pull \

ghcr.io/apache/nuttx/apache-nuttx-ci-linux:latest

## Inside the Docker Container:

## Build the Target Group

sudo docker run -it \

ghcr.io/apache/nuttx/apache-nuttx-ci-linux:latest \

/bin/bash -c "

cd ;

pwd ;

git clone https://github.com/apache/nuttx ;

git clone https://github.com/apache/nuttx-apps apps ;

pushd nuttx ; echo NuttX Source: https://github.com/apache/nuttx/tree/\$(git rev-parse HEAD) ; popd ;

pushd apps ; echo NuttX Apps: https://github.com/apache/nuttx-apps/tree/\$(git rev-parse HEAD) ; popd ;

cd nuttx/tools/ci ;

(./cibuild.sh -c -A -N -R testlist/$job.dat || echo '***** BUILD FAILED') ;

"

We run it like this (will take 1.5 hours)…

$ sudo ./run-job.sh arm-01

NuttX Source: https://github.com/apache/nuttx/tree/9c1e0d3d640a297cab9f2bfeedff02f6ce7a8162

NuttX Apps: https://github.com/apache/nuttx-apps/tree/52a50ea72a2d88ff5b7f3308e1d132d0333982e8

====================================================================================

Configuration/Tool: pcduino-a10/nsh,CONFIG_ARM_TOOLCHAIN_GNU_EABI

2024-10-20 17:38:10

------------------------------------------------------------------------------------

Cleaning...

Configuring...

Disabling CONFIG_ARM_TOOLCHAIN_GNU_EABI

Enabling CONFIG_ARM_TOOLCHAIN_GNU_EABI

Building NuttX...

arm-none-eabi-ld: warning: /root/nuttx/nuttx has a LOAD segment with RWX permissions

Normalize pcduino-a10/nsh

====================================================================================

Configuration/Tool: beaglebone-black/lcd,CONFIG_ARM_TOOLCHAIN_GNU_EABI

2024-10-20 17:39:09

(Ignore “arm-nuttx-eabi-gcc: command not found”)

What about building a Single Target?

Suppose we wish to build ox64:nsh. Just change this…

cd nuttx/tools/ci ;

./cibuild.sh -c -A -N -R testlist/$job.dat ;

To this…

cd nuttx ;

tools/configure.sh ox64:nsh ;

make ;

What if we’re testing our own repo?

Suppose we’re preparing a Pull Request at github.com/USER/nuttx/tree/BRANCH. Just change this…

git clone https://github.com/apache/nuttx ;

To this…

git clone https://github.com/USER/nuttx --branch BRANCH ;

How to copy the Compiled Files out of the Docker Container?

This will copy out the Compiled NuttX Binary from Docker…

## Get the Container ID

sudo docker ps

## Fill in the Container ID below

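## Note: "docker ps" lists only the running containers.

## If the container has exited, "docker ps -a" will show it

## (docker cp also works on a stopped container, until it's pruned)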

sudo docker cp \

CONTAINER_ID:/root/nuttx/nuttx \

.

Now we scale up…

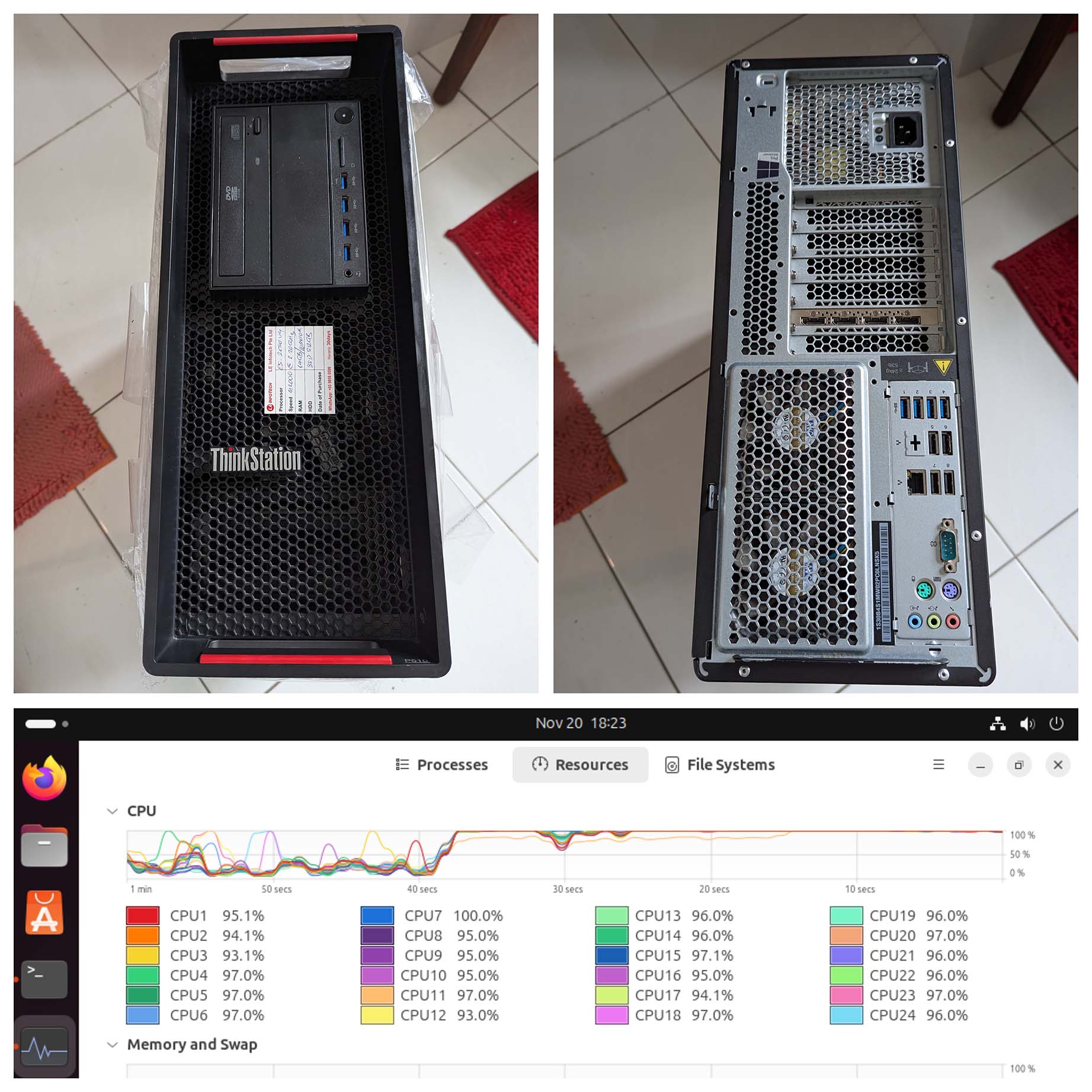

What about compiling NuttX for All Target Groups? From arm-01 to arm-14?

We loop through All Target Groups and compile them…

For Each Target Group:

arm-01 … arm-14

Compile NuttX for the Target Group

Check for Errors and Warnings

Upload the Build Log

Our script becomes more sophisticated: run-ci.sh

## Repeat Forever for All Target Groups

for (( ; ; )); do

for job in \

arm-01 arm-02 arm-03 arm-04 \

arm-05 arm-06 arm-07 arm-08 \

arm-09 arm-10 arm-11 arm-12 \

arm-13 arm-14

do

## Build the Target Group

## and find Errors / Warnings

run_job $job

clean_log

find_messages

## Get the hashes for NuttX and Apps

nuttx_hash=$(grep --only-matching -E "nuttx/tree/[0-9a-z]+" $log_file | grep --only-matching -E "[0-9a-z]+$")

apps_hash=$(grep --only-matching -E "nuttx-apps/tree/[0-9a-z]+" $log_file | grep --only-matching -E "[0-9a-z]+$")

## Upload the log

## https://gist.github.com/nuttxpr

upload_log $job $nuttx_hash $apps_hash

sleep 10

done

## Free up the Docker disk space

sudo docker system prune --force

done

We run our Build Farm like this…

## Download the scripts

git clone https://github.com/lupyuen/nuttx-release

cd nuttx-release

## Login to GitHub in Headless Mode

sudo apt install gh

sudo gh auth login

## (1) What Account: "GitHub.com"

## (2) Preferred Protocol: "HTTPS"

## (3) Authenticate GitHub CLI: "Login with a web browser"

## (4) Copy the One-Time Code, press Enter

## (5) Press "q" to quit the Text Browser that appears

## (6) Switch to Firefox Browser and load https://github.com/login/device

## (7) Enter the One-Time Code. GitHub Login will proceed.

## See https://stackoverflow.com/questions/78890002/how-to-do-gh-auth-login-when-run-in-headless-mode

## Run the Build Job forever: arm-01 ... arm-14

sudo ./run-ci.sh

## To run Multiple Build Jobs: Repeat these steps...

## tmux

## cd $HOME/nuttx-release && sudo sh -c '. ../gitlab-token.sh && ./run-ci.sh '$TMUX_PANE

## To monitor Multiple Build Jobs:

## for (( ; ; )) do

## clear

## ps aux | grep bash | grep run-job.sh | colrm 1 109 | sort

## ps aux | grep bash | grep run-job-special.sh | colrm 1 98 | sort

## set -e

## date ; sleep 10

## set +e

## done

(Also works for GitLab Snippets)

How does it work?

Inside our script, run_job will compile a single Target Group: run-ci.sh

## Build the Target Group, like "arm-01"

function run_job {

local job=$1

pushd /tmp

script $log_file \

$script_option \

"$script_dir/run-job.sh $job"

popd

}

Which calls the script we’ve seen earlier: run-job.sh

upload_log will upload the log (to GitHub Gist) for further processing (and alerting): run-ci.sh

(Now we have a NuttX Dashboard!)

## Upload to GitHub Gist.

## For Safety: We should create a New GitHub Account for publishing Gists.

## https://gist.github.com/nuttxpr

function upload_log {

local job=$1

local nuttx_hash=$2

local apps_hash=$3

## TODO: Change this to use GitHub Token for our new GitHub Account

cat $log_file | \

gh gist create \

--public \

--desc "[$job] CI Log for nuttx @ $nuttx_hash / nuttx-apps @ $apps_hash" \

--filename "ci-$job.log"

}
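The two hashes passed to upload_log come from the grep pipeline in the main loop of run-ci.sh. Here's a minimal sketch of that pipeline, run against the two "NuttX Source" and "NuttX Apps" lines from the sample output. The `extract_hash` wrapper and the `head -n 1` guard are our own additions for illustration, they're not in the original script.

```shell
# Sketch: extract the commit hash that follows a URL prefix in a log file.
# extract_hash is a hypothetical wrapper around the grep pipeline in run-ci.sh.
extract_hash() {
  local prefix=$1    # "nuttx/tree/" or "nuttx-apps/tree/"
  local log_file=$2
  grep --only-matching -E "${prefix}[0-9a-z]+" "$log_file" \
    | grep --only-matching -E "[0-9a-z]+$" \
    | head -n 1      # Assumption: keep only the first hash if the log repeats it
}

# Sample log containing the two lines printed by run-job.sh
sample_log=$(mktemp)
cat >"$sample_log" <<'EOF'
NuttX Source: https://github.com/apache/nuttx/tree/9c1e0d3d640a297cab9f2bfeedff02f6ce7a8162
NuttX Apps: https://github.com/apache/nuttx-apps/tree/52a50ea72a2d88ff5b7f3308e1d132d0333982e8
EOF

nuttx_hash=$(extract_hash "nuttx/tree/" "$sample_log")
apps_hash=$(extract_hash "nuttx-apps/tree/" "$sample_log")
echo "nuttx @ $nuttx_hash"
echo "nuttx-apps @ $apps_hash"
rm -f "$sample_log"
```

Note that "nuttx/tree/" won't match the nuttx-apps URL, because the text after "nuttx" there is "-apps", not "/tree/". So the two hashes don't get mixed up.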

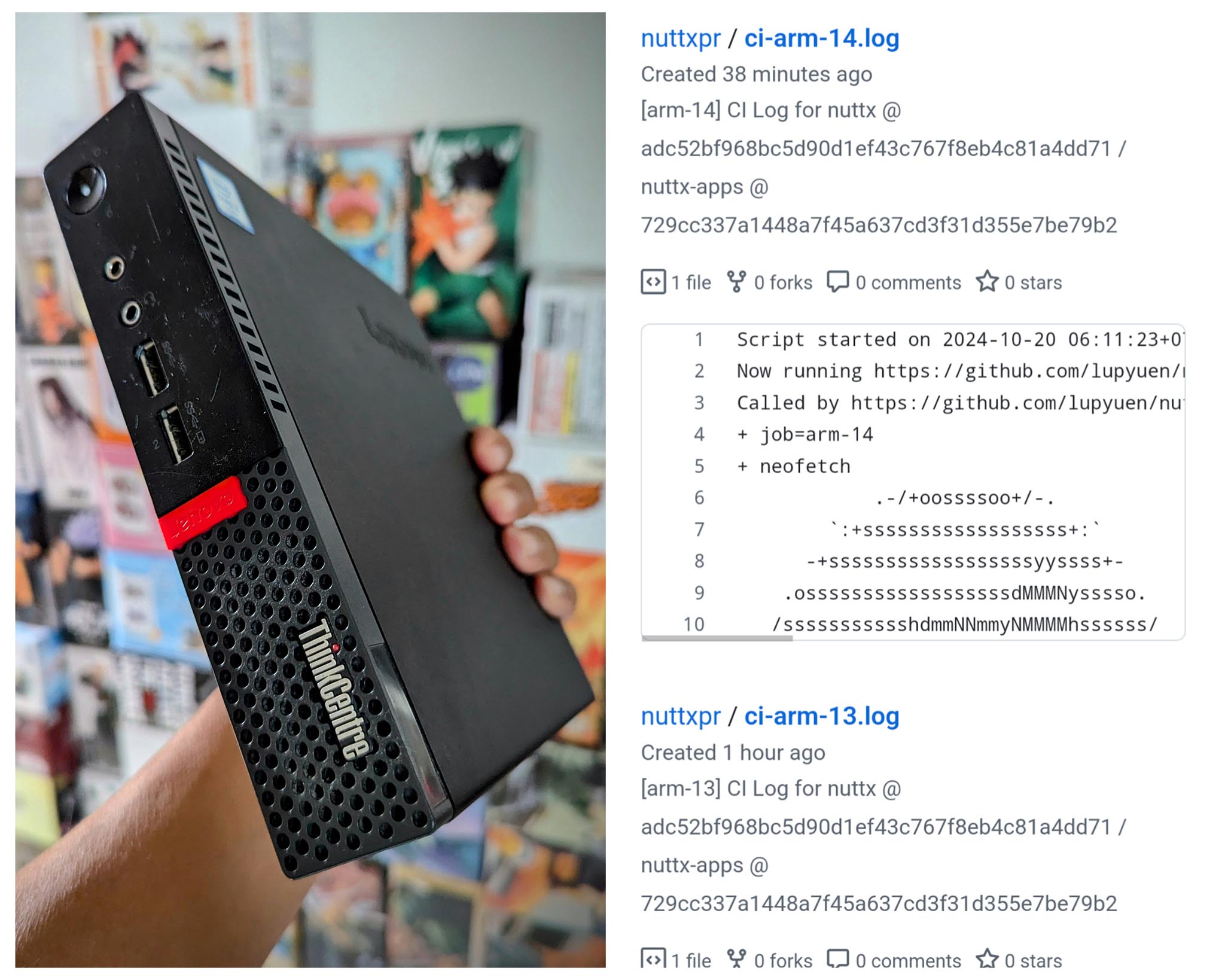

The whole thing (arm-01 … arm-14) will take 17.5 Hours to complete on our Refurbished Intel i5 PC.

(Constrained by CPU, not RAM or I/O. Pic above)
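A quick back-of-envelope check on that figure, as a sketch: 17.5 hours spread over 14 Target Groups gives the average time per group. This assumes nothing beyond the two numbers above. Individual groups will differ (arm-01 took about 1.5 hours).

```shell
# 17.5 hours = 1050 minutes, spread over 14 Target Groups
total_minutes=$(( 17 * 60 + 30 ))
group_count=14
avg_minutes=$(( total_minutes / group_count ))
echo "Average per Target Group: $avg_minutes minutes"
```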

Something quirky about Errors and Warnings…

In the script above, we call find_messages to search for Errors and Warnings: run-ci.sh

## Search for Errors and Warnings

function find_messages {

local tmp_file=/tmp/release-tmp.log

local msg_file=/tmp/release-msg.log

local pattern='^(.*):(\d+):(\d+):\s+(warning|fatal error|error):\s+(.*)$'

grep '^\*\*\*\*\*' $log_file \

> $msg_file

grep -P "$pattern" $log_file \

| uniq \

>> $msg_file

cat $msg_file $log_file >$tmp_file

mv $tmp_file $log_file

}

Which will insert the Errors and Warnings into the top of the Log File.

Why the funny Regex Pattern?

The Regex Pattern above is the same one that NuttX uses to detect errors in our Continuous Integration builds: .github/gcc.json

## Filename : Line : Col : warning/error : Message

^(.*):(\d+):(\d+):\s+(warning|fatal error|error):\s+(.*)$

Which will match and detect GCC Compiler Errors like…

chip/stm32_gpio.c:41:11: warning: CONFIG_STM32_USE_LEGACY_PINMAP will be deprecated

But it won’t match CMake Errors like this!

CMake Warning at cmake/nuttx_kconfig.cmake:171 (message):

Kconfig Configuration Error: warning: STM32_HAVE_HRTIM1_PLLCLK (defined at

arch/arm/src/stm32/Kconfig:8109) has direct dependencies STM32_HRTIM &&

ARCH_CHIP_STM32 && ARCH_ARM with value n, but is currently being y-selected

And Linker Errors…

arm-none-eabi-ld: /root/nuttx/staging//libc.a(lib_arc4random.o): in function `arc4random_buf':

/root/nuttx/libs/libc/stdlib/lib_arc4random.c:111:(.text.arc4random_buf+0x26): undefined reference to `clock_systime_ticks'

Also Network and Timeout Errors…

curl: (6) Could not resolve host: github.com

make[1]: *** [open-amp.defs:59: open-amp.zip] Error 6

We might need to tweak the Regex Pattern and catch more errors.
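As a starting point for that tweak, here's a rough sketch that compares the GCC pattern against a wider set of patterns, using the sample messages above. The extra patterns are assumptions drawn only from those samples (NuttX CI doesn't use them), and `find_more_messages` is a hypothetical helper. We've rewritten the GCC pattern for `grep -E` (POSIX character classes instead of `\d` and `\s`) so the sketch doesn't depend on `grep -P`.

```shell
# Sketch: count the lines matched by the GCC pattern versus a wider set.
# The extra patterns are assumptions based on the sample errors, not NuttX CI rules.

# GCC pattern from .github/gcc.json, rewritten for POSIX ERE (grep -E)
gcc_pattern='^(.*):([0-9]+):([0-9]+):[[:space:]]+(warning|fatal error|error):[[:space:]]+(.*)$'

# Hypothetical helper: print lines matching the GCC pattern or the extra patterns
find_more_messages() {
  local log_file=$1
  grep -E \
    -e "$gcc_pattern" \
    -e '^CMake (Warning|Error)' \
    -e 'undefined reference to' \
    -e '^curl: \([0-9]+\)' \
    -e '\*\*\* .*Error [0-9]+' \
    "$log_file"
}

# Sample log built from the messages shown in this article
sample_log=$(mktemp)
cat >"$sample_log" <<'EOF'
Building NuttX...
chip/stm32_gpio.c:41:11: warning: CONFIG_STM32_USE_LEGACY_PINMAP will be deprecated
CMake Warning at cmake/nuttx_kconfig.cmake:171 (message):
/root/nuttx/libs/libc/stdlib/lib_arc4random.c:111:(.text.arc4random_buf+0x26): undefined reference to `clock_systime_ticks'
curl: (6) Could not resolve host: github.com
make[1]: *** [open-amp.defs:59: open-amp.zip] Error 6
EOF

gcc_only=$(grep -c -E "$gcc_pattern" "$sample_log")
widened=$(find_more_messages "$sample_log" | wc -l | tr -d ' ')
echo "GCC pattern: $gcc_only line(s), widened patterns: $widened line(s)"
rm -f "$sample_log"
```

Wider patterns will also raise the odds of false alarms, so they'd need checking against real Build Logs before we trust them.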

Huh? Aren’t we making a Build Farm, not a Build Server?

Just add a second Ubuntu PC, partition the Target Groups across the PCs. And we’ll have a Build Farm!
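One possible way to partition the Target Groups, as a sketch: deal them out round-robin, so each PC gets every Nth group. `jobs_for_pc` is a hypothetical helper (it's not in run-ci.sh) and the PC numbering from 0 is our assumption. Round-robin ignores that some groups build slower than others.

```shell
# Sketch: split the Target Groups across several PCs, round-robin.
# jobs_for_pc is a hypothetical helper; pc_index starts at 0.
all_jobs="arm-01 arm-02 arm-03 arm-04 arm-05 arm-06 arm-07 \
arm-08 arm-09 arm-10 arm-11 arm-12 arm-13 arm-14"

jobs_for_pc() {
  local pc_index=$1   # Which PC we are: 0, 1, ...
  local pc_count=$2   # Total number of PCs in the Build Farm
  local i=0
  local selected=""
  local job
  for job in $all_jobs; do
    # Keep every pc_count-th group, offset by pc_index
    if [ $(( i % pc_count )) -eq "$pc_index" ]; then
      selected="$selected $job"
    fi
    i=$(( i + 1 ))
  done
  echo "${selected# }"   # Strip the leading space
}

echo "PC 0: $(jobs_for_pc 0 2)"
echo "PC 1: $(jobs_for_pc 1 2)"
```

Each PC could then loop over its own list in place of the full arm-01 … arm-14 list.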

What about macOS?

macOS compiles NuttX a little differently from Linux. (See sim/rpserver_virtio)

BUT… GitHub charges a 10x Premium for macOS Runners. That’s why we shut them down to cut costs. (Pic above)

Probably cheaper to buy our own Refurbished Mac Mini (Intel only), running NuttX Jobs all day?

(Seeking help to port NuttX Jobs to M1 Mac)

We have more stories about NuttX Continuous Integration in these articles…

“Optimising the Continuous Integration for Apache NuttX RTOS (GitHub Actions)”

“Continuous Integration Dashboard for Apache NuttX RTOS (Prometheus and Grafana)”

“Failing a Continuous Integration Test for Apache NuttX RTOS (QEMU RISC-V)”

“(Experimental) Mastodon Server for Apache NuttX Continuous Integration (macOS Rancher Desktop)”

“Test Bot for Pull Requests … Tested on Real Hardware (Apache NuttX RTOS / Oz64 SG2000 RISC-V SBC)”

Many Thanks to my GitHub Sponsors (and the awesome NuttX Community) for supporting my work! This article wouldn’t have been possible without your support.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…