📝 29 Sep 2024

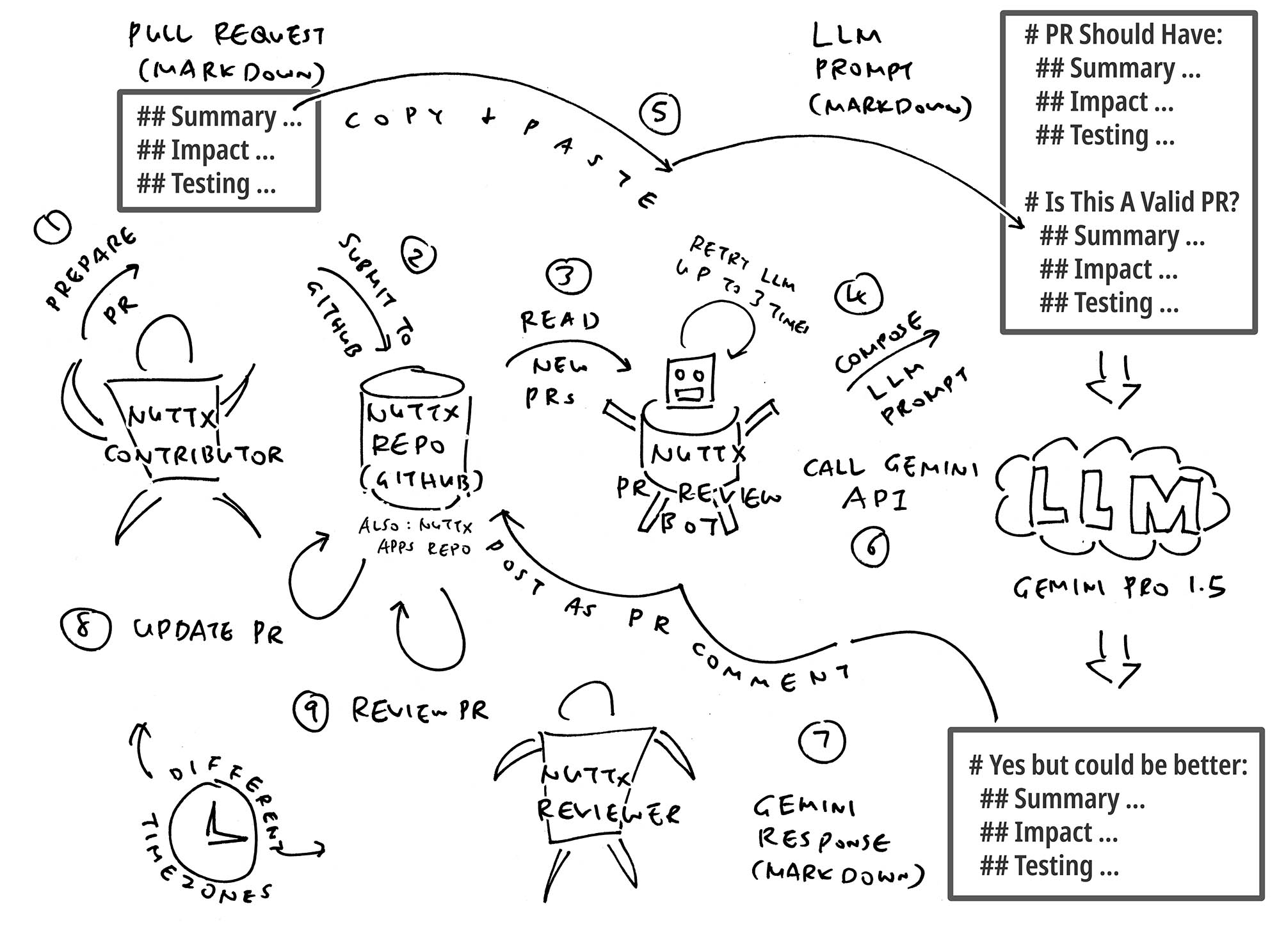

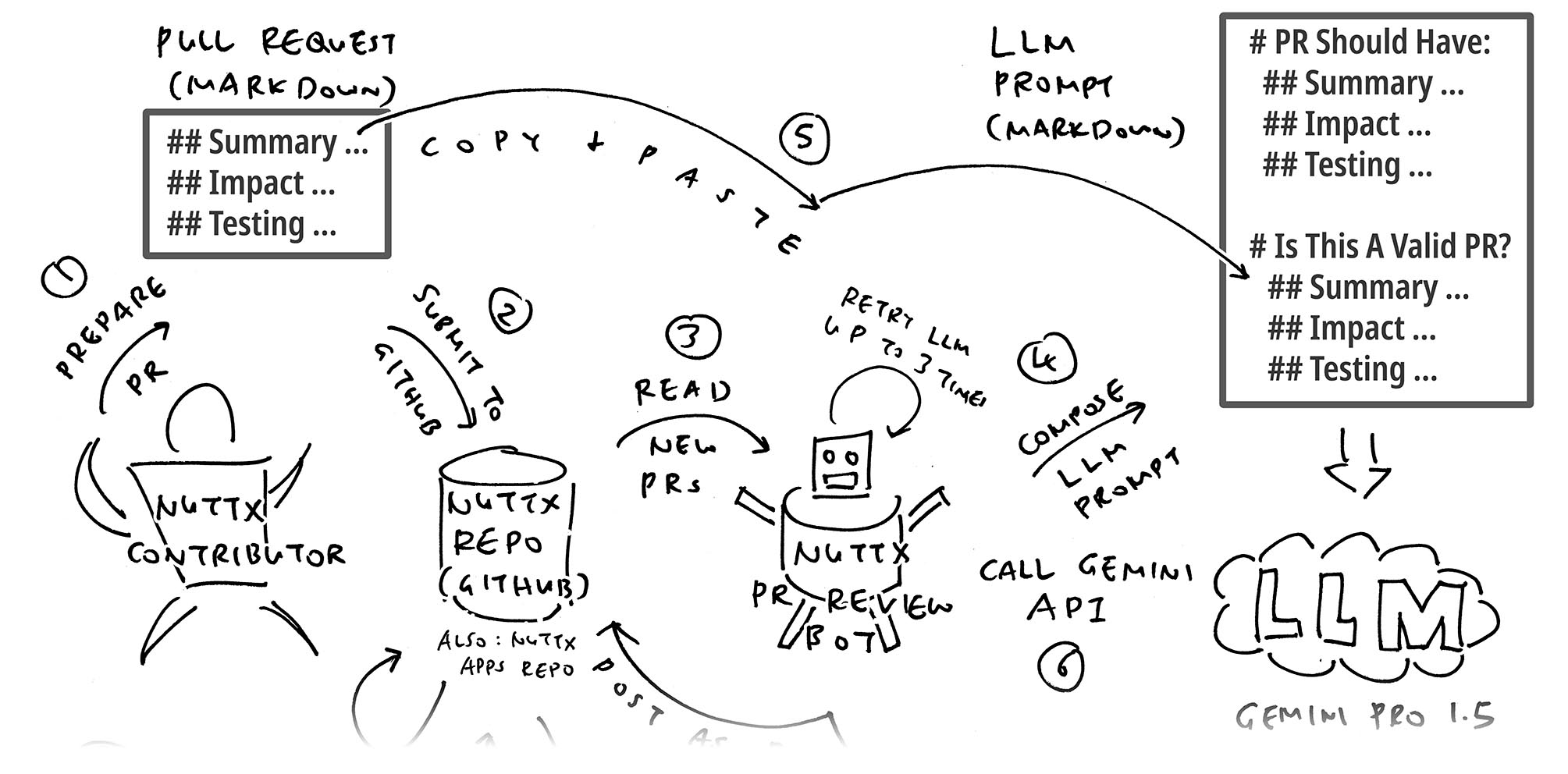

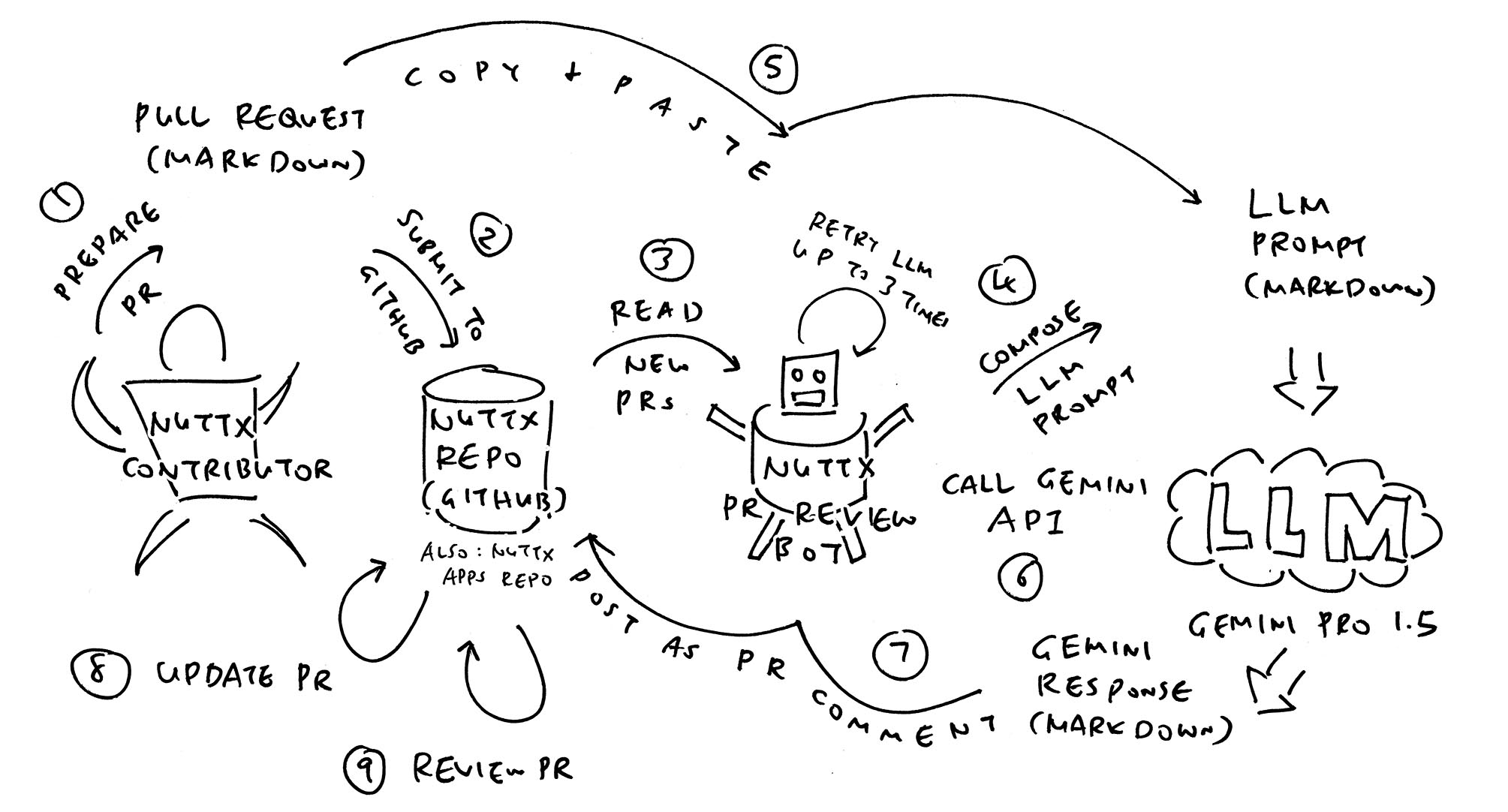

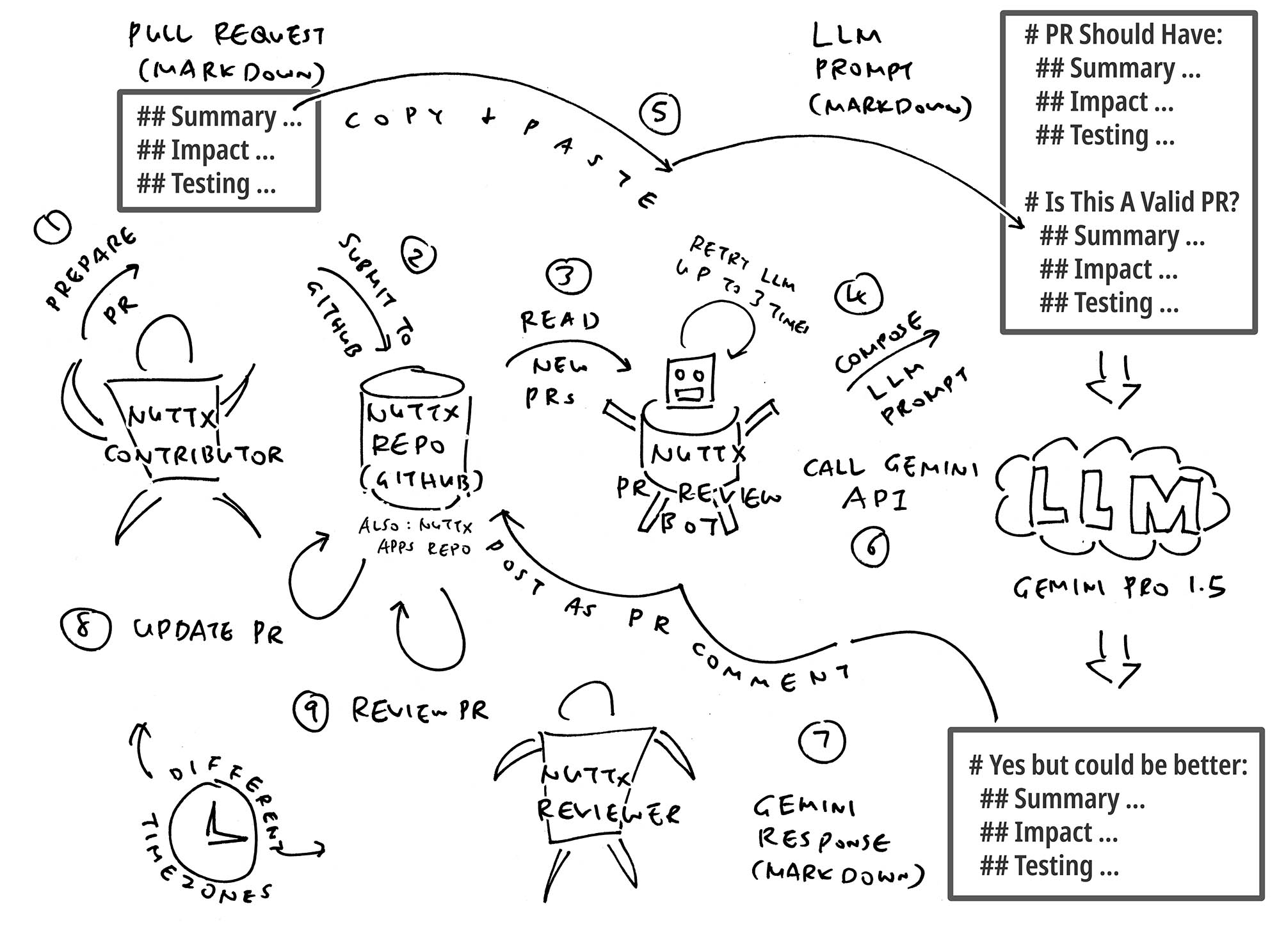

We’re experimenting with an LLM Bot (Large Language Model) that will review Pull Requests for Apache NuttX RTOS.

This article explains how we created the LLM Bot in One Week…

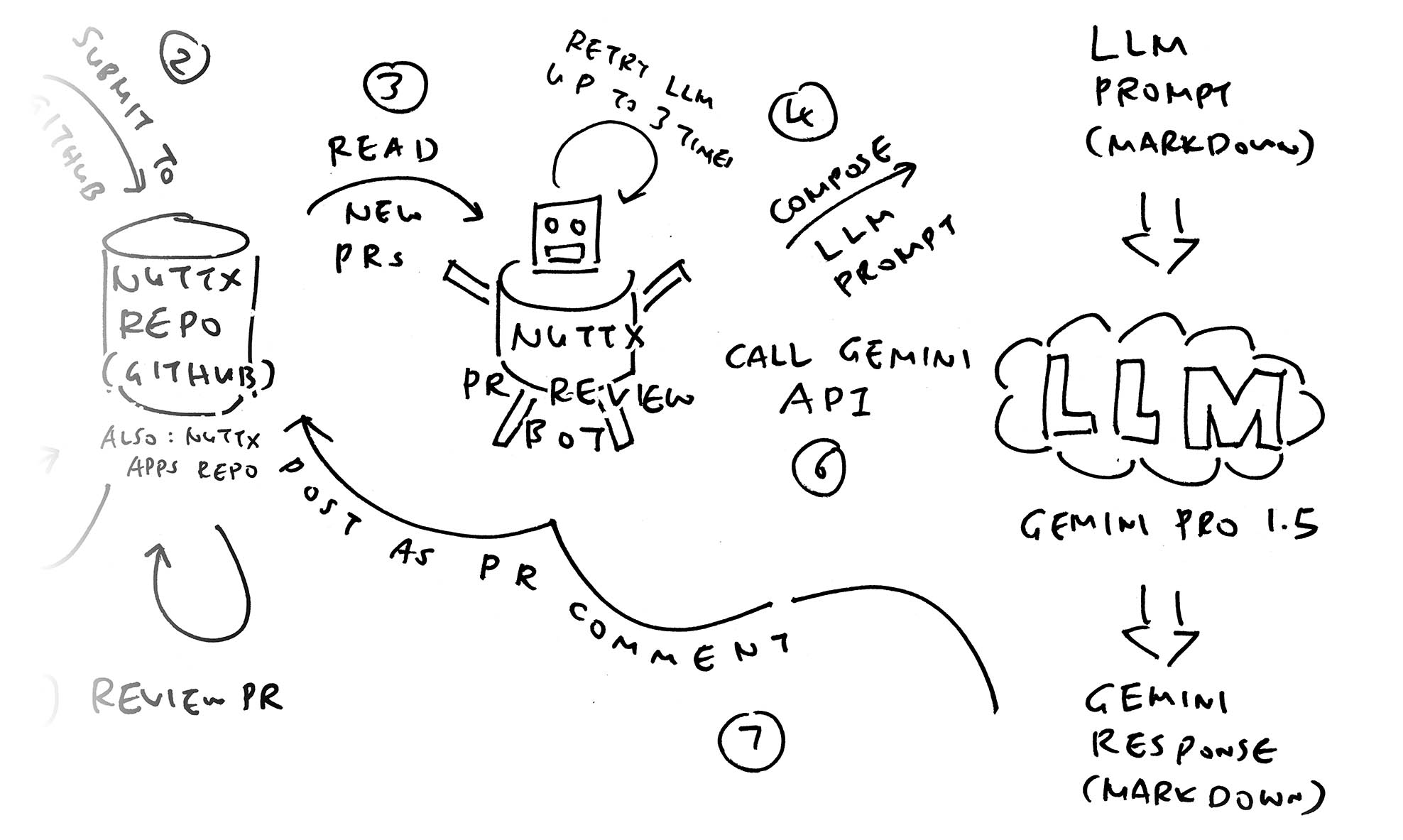

We call GitHub API to fetch NuttX Pull Requests

Append the PR Body to the NuttX PR Requirements

Which becomes the LLM Prompt that we send to Gemini API

Our Bot posts the Gemini Response as a PR Review Comment

Due to quirks in Gemini API: We use Emoji Reactions to limit the API Calls

Though our LLM Bot was Created by Accident

(It wasn’t meant to be an AI Project!)

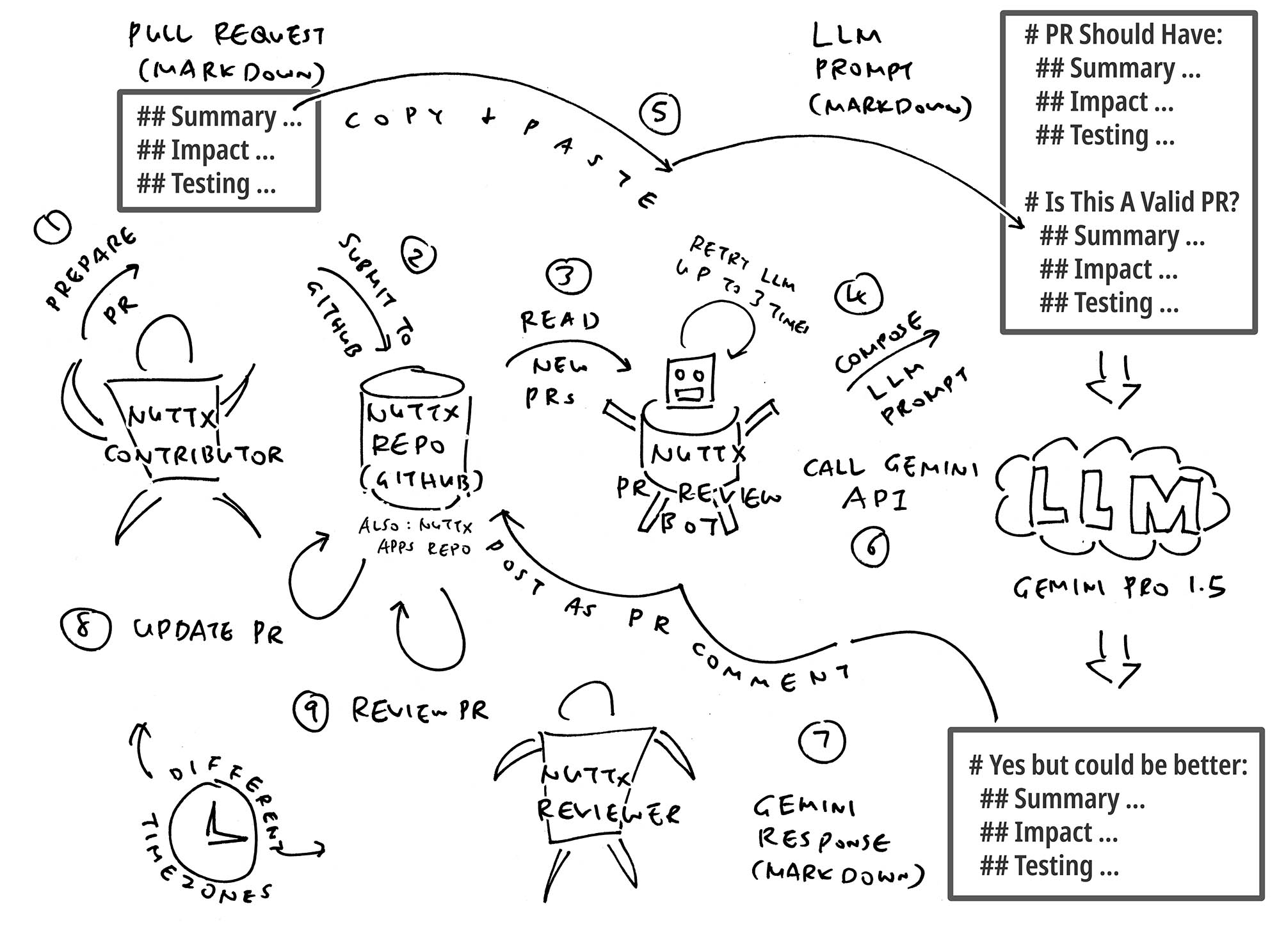

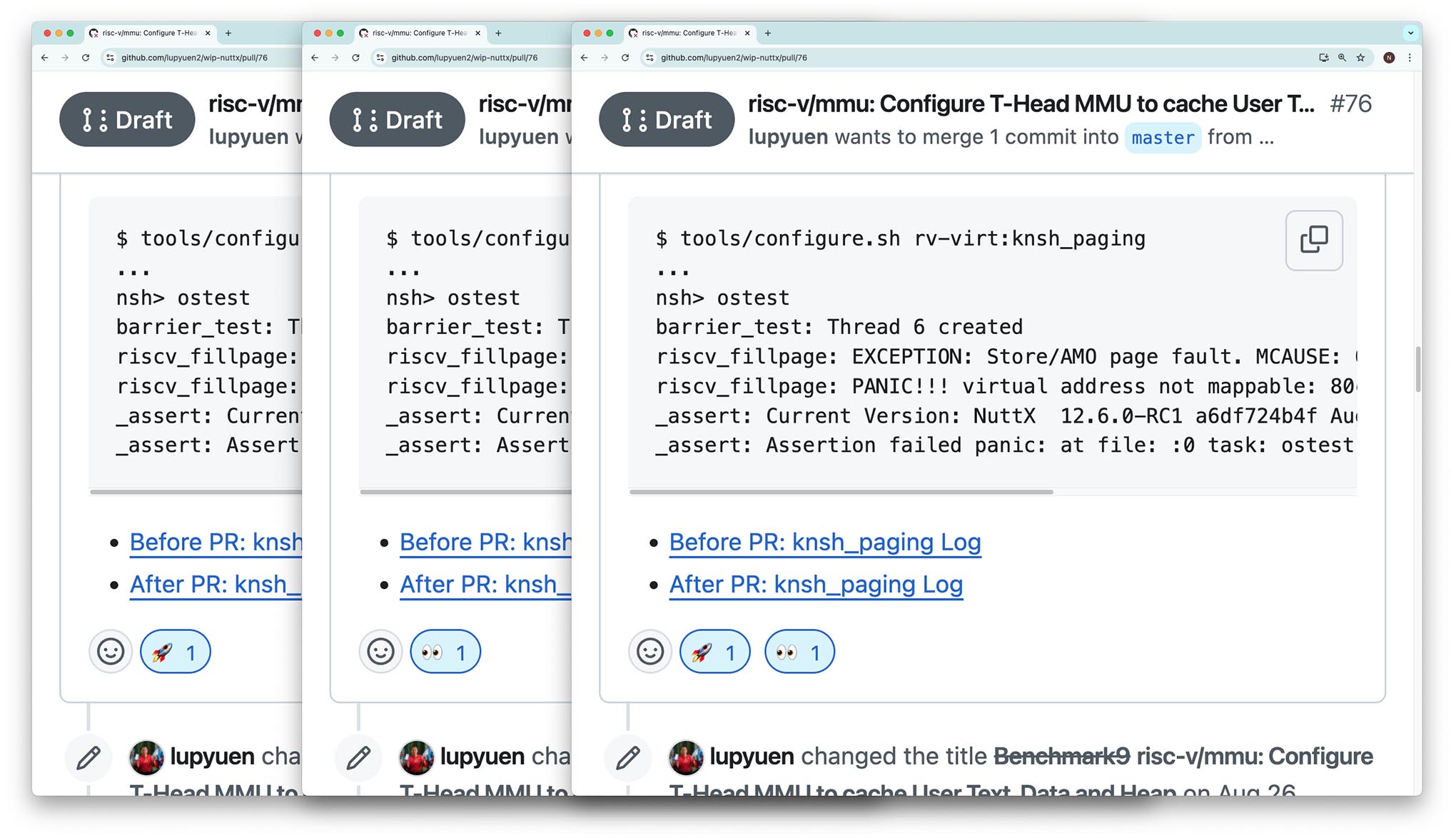

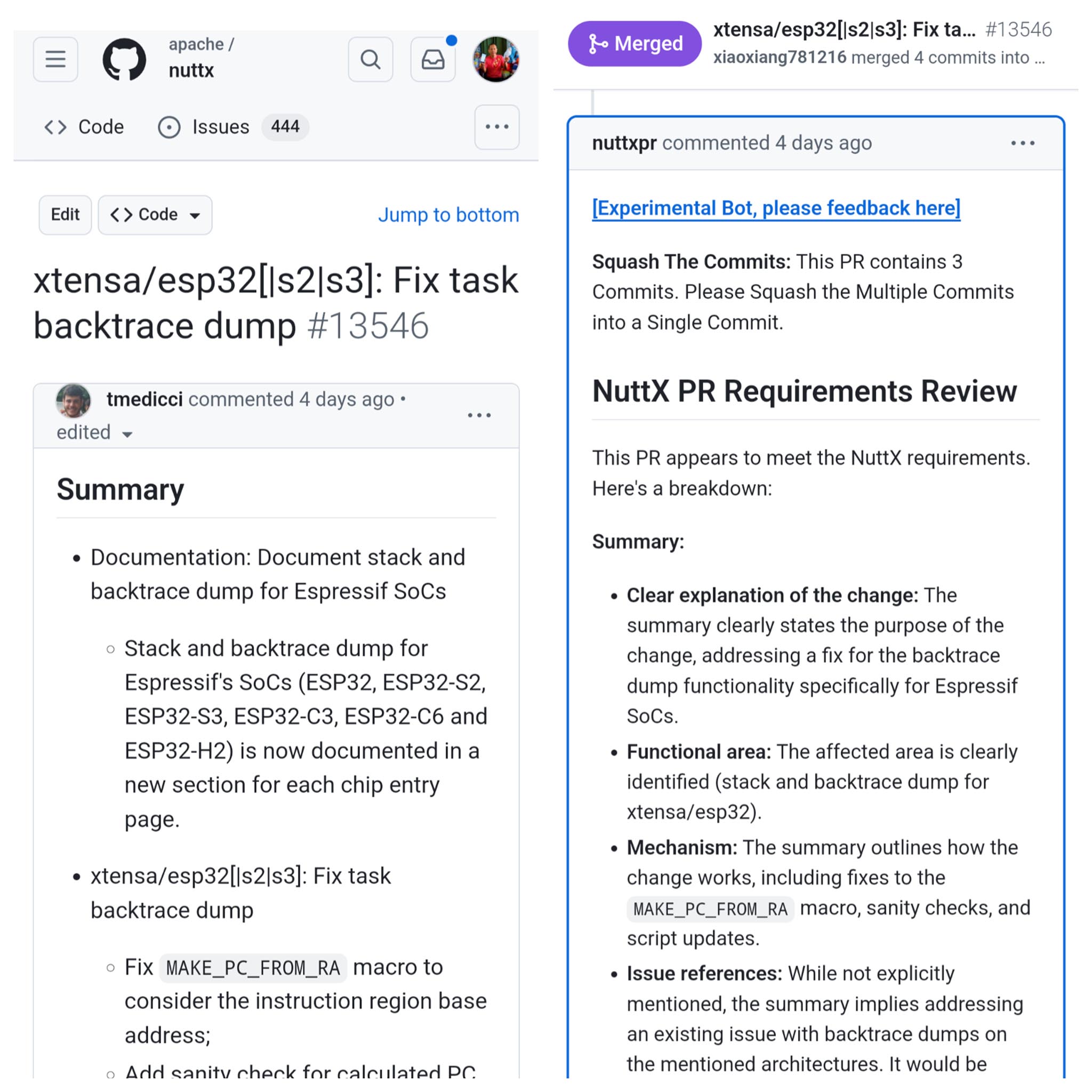

Suppose we Submit a Pull Request to the NuttX Repo (pic above)…

Summary

Document stack and backtrace dump for Espressif SoCs […more…]

Impact

Fix backtrace dump on assertions considering assertions triggered by tasks or during an interrupt service.

Testing

Internal CI testing with HW on all Xtensa-based devices […more…]

Our PR Review Bot will helpfully Review the Pull Request and post a Review Comment…

This PR appears to meet the NuttX requirements. Here’s a breakdown:

Summary:

Impact:

Testing:

Overall:

This PR demonstrates a good understanding of NuttX requirements. Adding specific issue references and detailing the testing environment would further strengthen it.

Hopefully this will be helpful to New Contributors to NuttX. And it might help the PR Reviewers too.

What just happened? We find out…

Our Bot gets really chatty and naggy. Why?

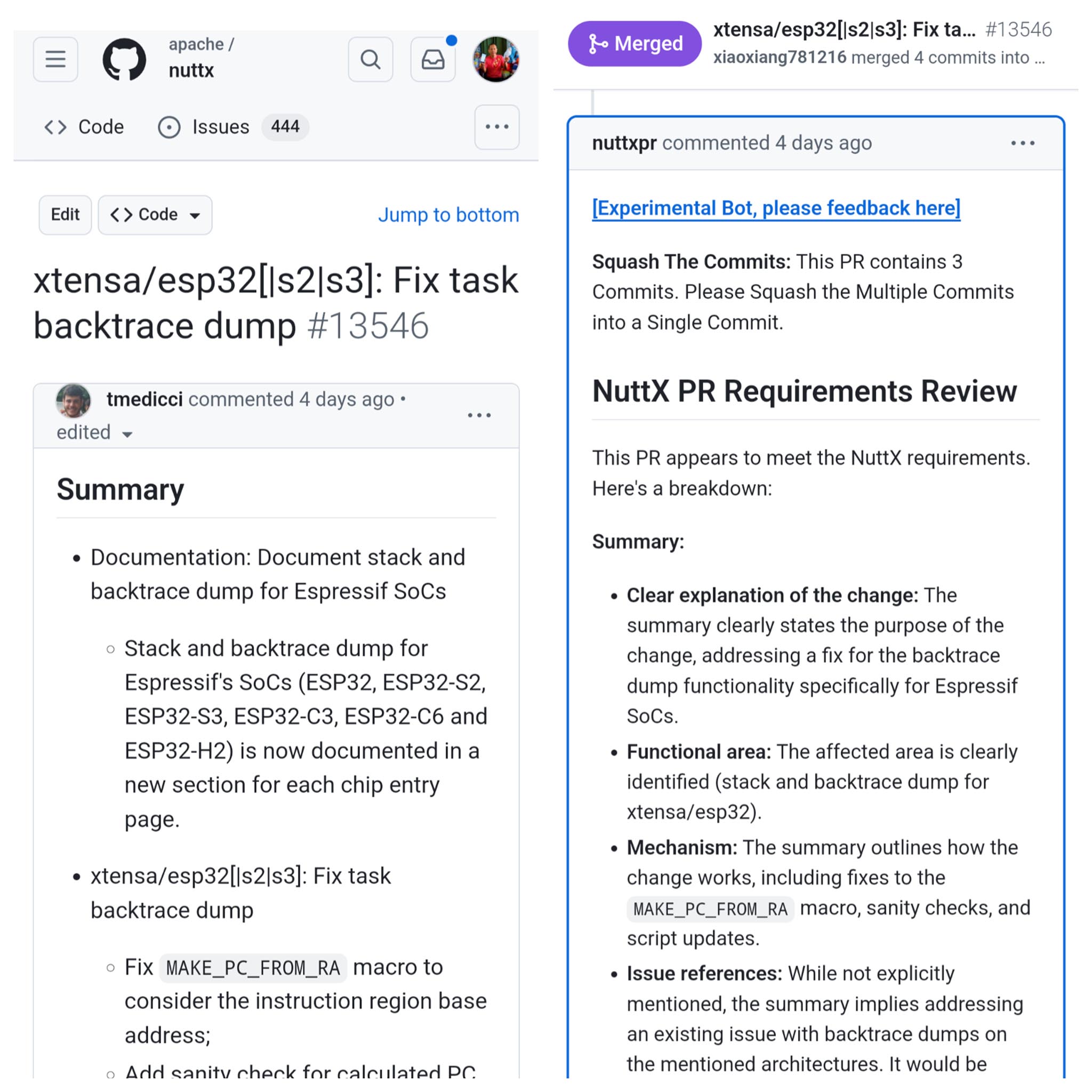

That’s because we programmed it with a Long List of Requirements for PR Review (pic above), in Markdown Format: main.rs

/// Requirements for PR Review

const REQUIREMENTS: &str =

r#####"

# Here are the requirements for a NuttX PR

## Summary

* Why change is necessary (fix, update, new feature)?

* What functional part of the code is being changed?

* How does the change exactly work (what will change and how)?

* Related [NuttX Issue](https://github.com/apache/nuttx/issues) reference if applicable.

* Related NuttX Apps [Issue](https://github.com/apache/nuttx-apps/issues) / [Pull Request](https://github.com/apache/nuttx-apps/pulls) reference if applicable.

## Impact

* Is new feature added? Is existing feature changed?

* Impact on user (will user need to adapt to change)? NO / YES (please describe if yes).

* Impact on build (will build process change)? NO / YES (please descibe if yes).

* Impact on hardware (will arch(s) / board(s) / driver(s) change)? NO / YES (please describe if yes).

* Impact on documentation (is update required / provided)? NO / YES (please describe if yes).

* Impact on security (any sort of implications)? NO / YES (please describe if yes).

* Impact on compatibility (backward/forward/interoperability)? NO / YES (please describe if yes).

* Anything else to consider?

## Testing

I confirm that changes are verified on local setup and works as intended:

* Build Host(s): OS (Linux,BSD,macOS,Windows,..), CPU(Intel,AMD,ARM), compiler(GCC,CLANG,version), etc.

* Target(s): arch(sim,RISC-V,ARM,..), board:config, etc.

Testing logs before change: _your testing logs here_

Testing logs after change: _your testing logs here_

"#####;But these are Human-Readable Requirements?

That’s the beauty of an LLM: We feed it the Human Text, then the LLM gets it (hopefully) and does what we expect it to do!

Remember to define our requirements precisely, mark the boundaries clearly. Otherwise our LLM will wander off, and hallucinate strange new ways to validate our Pull Request.

(Which happened in our last LLM Experiment)

How do we feed the PR Content to LLM?

Our PR Requirements are in Markdown Format. Same for the PR Content.

Thus we meld them together into One Long Markdown Doc and feed to LLM: main.rs

// Compose the Prompt for LLM Request:

// PR Requirements + PR Body

let input =

REQUIREMENTS.to_string() +

"\n\n# Does this PR meet the NuttX Requirements? Please be concise\n\n" +

&body;Which will look like this…

# Here are the requirements for a NuttX PR

## Summary [...requirements...]

## Impact [...requirements...]

## Testing [...requirements...]

# Does this PR meet the NuttX Requirements? Please be concise

## Summary

Document stack and backtrace dump for Espressif SoCs [...more...]

## Impact

Fix backtrace dump on assertions considering assertions triggered by tasks or during an interrupt service.

## Testing

Internal CI testing with HW on all Xtensa-based devices [...more...]Why “please be concise”?

Based on Community Feedback, our Bot was getting way too chatty and naggy.

It’s hard to control the LLM Output, hence we politely asked LLM to tone down the response. (And be a little less irritating)

Also we excluded the Bot from Pull Requests that are Extra Small: 10 lines of code changes or fewer.

Will our Bot get stuck in a loop? Forever replying to its own responses?

Nope it won’t. Our Bot will skip Pull Requests that have Comments.

How are we running the LLM?

We call Gemini Pro 1.5 for the LLM: main.rs

// Init the Gemini Client (Pro 1.5)

let client = Client::new_from_model(

Model::Gemini1_5Pro,

env::var("GEMINI_API_KEY").unwrap().to_string()

);

// Compose the Prompt for Gemini Request:

// PR Requirements + PR Body

let input =

REQUIREMENTS.to_string() +

"\n\n# Does this PR meet the NuttX Requirements? Please be concise\n\n" +

&body;(Thanks to google-generative-ai-rs)

After composing the LLM Prompt (PR Requirements + PR Content), we send it to Gemini: main.rs

// Compose the Gemini Request

let txt_request = Request {

contents: vec![Content {

role: Role::User,

parts: vec![Part {

text: Some(input.to_string()),

inline_data: None,

file_data: None,

video_metadata: None,

}],

}],

tools: vec![],

safety_settings: vec![],

generation_config: None,

system_instruction: None,

};

// Send the Gemini Request

let response = client

.post(30, &txt_request)

.await?;

// Get the Gemini Response

let response_text =

response.rest().unwrap()

.candidates.first().unwrap()

.content.parts.first().unwrap()

.text.clone().unwrap();Gemini Response will be in Markdown Format (pic above)…

## NuttX PR Requirements Review

This PR appears to meet the NuttX requirements. Here's a breakdown:

**Summary:**

* **Clear explanation of the change:** The summary clearly states the purpose of the change, addressing a fix for the backtrace dump functionality specifically for Espressif SoCs.

* **Functional area:** The affected area is clearly identified (stack and backtrace dump for xtensa/esp32).

* **Mechanism:** The summary outlines how the change works, including fixes to the `MAKE_PC_FROM_RA` macro, sanity checks, and script updates.

* **Issue references:** While not explicitly mentioned, the summary implies addressing an existing issue with backtrace dumps on the mentioned architectures. It would be beneficial to add specific issue references if applicable.

**Impact:**

* **Feature change:** This PR focuses on fixing an existing feature (backtrace dump), not introducing a new one.

* **User impact:** No user-facing changes are described, implying minimal impact.

* **Build impact:** No build process changes are mentioned.

* **Hardware impact:** The changes are specific to Xtensa-based devices, specifically ESP32 and its variants, which is clearly stated.

* **Documentation impact:** The summary explicitly mentions updating documentation for each affected chip.

* **Security impact:** No security implications are mentioned.

* **Compatibility impact:** No backward or forward compatibility issues are mentioned.

**Testing:**

* **Verification:** The author confirms testing on local setup with positive results.

* **Host and Target:** While "Internal CI testing" is mentioned, listing specific host OS, compiler versions, and target boards used for testing would strengthen the validation.

* **Logs:** The PR provides clear steps to reproduce the issue and verify the fix on an ESP32. Including snippets of actual logs before and after the change would further enhance the testing section.

**Overall:**

This PR demonstrates a good understanding of NuttX requirements. Adding specific issue references and detailing the testing environment would further strengthen it. But we didn’t tell Gemini to respond in Markdown?

That’s the law of “LLM See, LLM Do”! We fed the input in Markdown Format, so it produced the output in Markdown Format too.

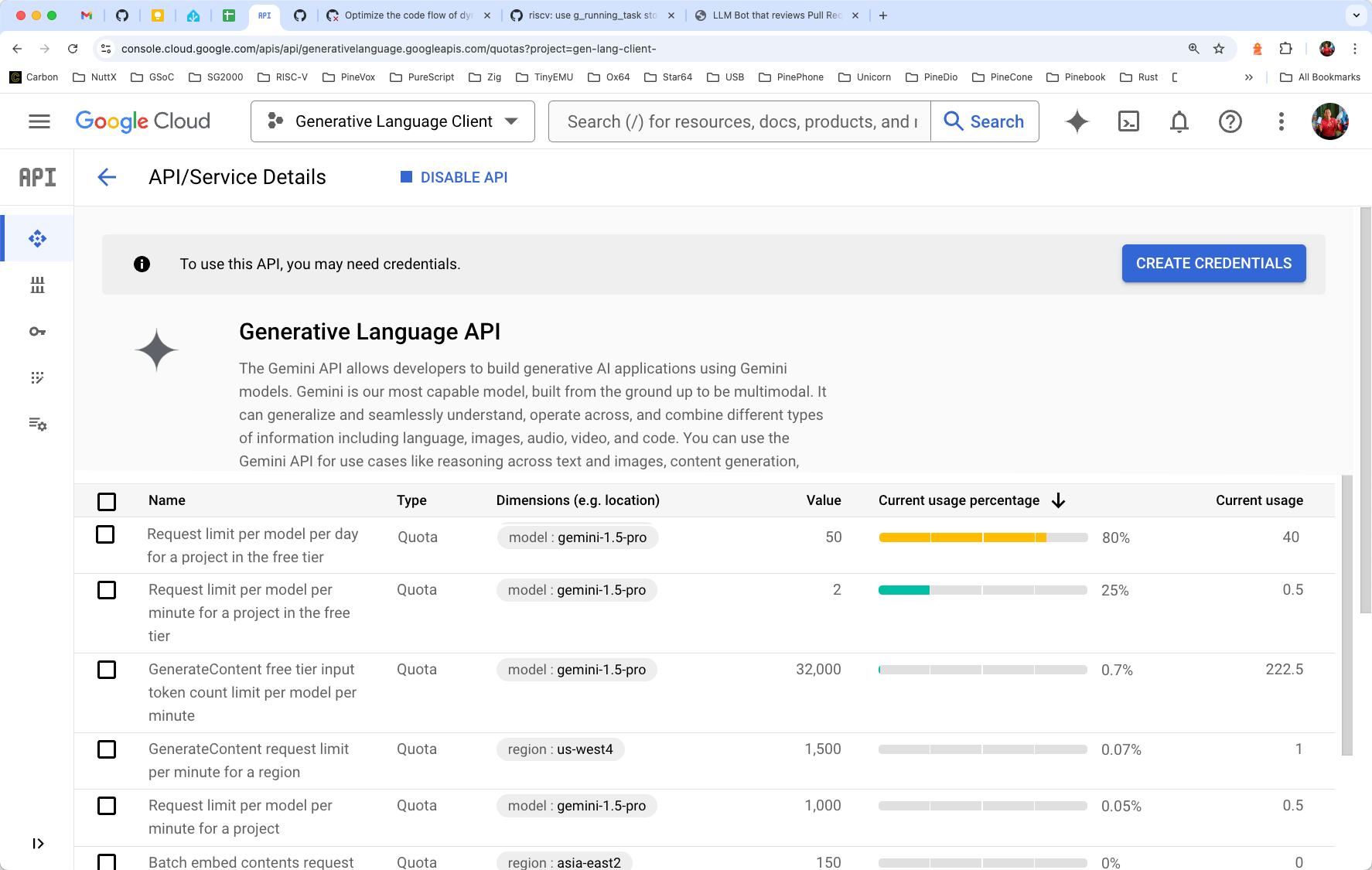

Are we paying for the LLM?

Right now we call the Free Tier of Gemini Pro 1.5. Which limits us to 50 LLM Requests per day. (Pic above)

That’s sufficient to review 50 Pull Requests for NuttX every day. (For both the NuttX Kernel Repo and the NuttX Apps Repo)

Any gotchas with the Free Tier of Gemini LLM?

Gemini API will sometimes fail with HTTP 500 (not sure why). And the Failed Request will be counted in our Daily Quota! (50 per day)

Thus we retry up to 3 times, in case the Pull Request has problematic text that the LLM couldn’t handle.

How does our Bot know when to stop the retries?

Our Bot uses Emoji Reactions (pic above) to tag each attempt. We’ll see the Pull Request tagged with…

| 🚀 | First Attempt |

| 👀 | Second Attempt |

| 🚀👀 | Third Attempt |

Then our Bot gives up. If it’s hunky dory, our Bot erases its Emoji Reactions. Everything happens here: main.rs

/// Bump up the 2 PR Reactions: 00 > 01 > 10 > 11

/// Position 0 is the Rocket Reaction, Position 1 is the Eye Reaction

async fn bump_reactions(issues: &IssueHandler<'_>, pr_id: u64, reactions: (Option<u64>, Option<u64>)) -> Result<(), Box<dyn std::error::Error>> {

match reactions {

// (Rocket, Eye)

(None, None) => { create_reaction(issues, pr_id, ReactionContent::Rocket).await?; }

(Some(id), None) => { delete_reaction(issues, pr_id, id).await?; create_reaction(issues, pr_id, ReactionContent::Eyes).await?; }

(None, Some(_)) => { create_reaction(issues, pr_id, ReactionContent::Rocket).await?; }

(Some(_), Some(_)) => { panic!("Reaction Overflow") }

}

Ok(())

}

How do we fetch the Pull Request? And post the Review Comment?

We call GitHub API with Octocrab Crate. (Pic above)

(More about GitHub API for Pull Requests)

Here we fetch the 20 Newest Pull Requests that are Open: main.rs

// Fetch the 20 Newest Pull Requests that are Open

let pr_list = octocrab

.pulls(owner, repo)

.list()

.state(params::State::Open)

.sort(params::pulls::Sort::Created)

.direction(params::Direction::Descending)

.per_page(20)

.send()

.await?;

// Every 5 Seconds: Process the next PR fetched

for pr in pr_list {

let pr_id = pr.number;

process_pr(&pulls, &issues, pr_id)

.await?;

sleep(Duration::from_secs(5));

}process_pr will read the PR Content: main.rs

// Skip if PR contains Comments

if pr.comments.unwrap() > 0 { return Ok(()); }

// Skip if PR Size is Unknown or Extra Small

let labels = pr.labels.unwrap();

let size_xs: Vec<Label> = labels

.into_iter()

.filter(|l| l.name == "Size: XS")

.collect();

if labels.is_empty() || size_xs.len() > 0 { return Ok(()); }

// Get the PR Body

let body = pr.body.unwrap_or("".to_string());

// Fetch the PR Commits. TODO: Will change `pull_number` to `pr_commits`

let commits = octocrab

.pulls(owner, repo);

.pull_number(pr_id)

.commits()

.await;

let commits = commits.unwrap().items;

// Omitted: Check for Empty Commit MessageThen we Validate the PR Content with Gemini API and post the Gemini Response: main.rs

// Omitted: Validate PR Body with Gemini LLM

...

// Post the Gemini Response as PR Comment

let comment = octocrab

.issues(owner, repo);

.create_comment(pr_id, comment_text)

.await?;

How to run our PR Review Bot?

This will run our PR Review Bot to handle Pull Requests for NuttX Kernel Repo and NuttX Apps Repo, every 10 minutes: run.sh

## For Gemini Token: Browse to Google AI Studio > Get API Key > Create API Key > Create API Key In New Project

## https://aistudio.google.com/app/apikey

export GEMINI_API_KEY=...

## For GitHub Token: Browse to GitHub Settings > Developer Settings > Tokens (Classic) > Generate New Token (Classic)

## Check the following:

## repo (Full control of private repositories)

## repo:status (Access commit status)

## repo_deployment (Access deployment status)

## public_repo (Access public repositories)

## repo:invite (Access repository invitations)

## security_events (Read and write security events)

export GITHUB_TOKEN=...

## Show the logs

set -x

export RUST_LOG=info

export RUST_BACKTRACE=1

## Download our PR Review Bot

git clone https://github.com/lupyuen/nuttx-pr-bot

cd nuttx-pr-bot

## Handle PRs for NuttX Kernel and Apps every 10 minutes

for (( ; ; ))

do

## For NuttX Kernel Repo: github.com/apache/nuttx

cargo run -- --owner apache --repo nuttx

sleep 300

## For NuttX Apps Repo: github.com/apache/nuttx-apps

cargo run -- --owner apache --repo nuttx-apps

sleep 300

doneAny GitHub ID will do. Don’t use a Privileged GitHub ID! We’ll see…

## Handle PRs for NuttX Kernel Repo: github.com/apache/nuttx

$ cargo run --owner apache --repo nuttx

warning: use of deprecated method `octocrab::pulls::PullRequestHandler::<'octo>::pull_number`: specific PR builder transitioned to pr_review_actions, reply_to_comment, reply_to_comment

--> src/main.rs:141:10

141 | .pull_number(pr_id)

Running `target/debug/nuttx-pr-bot`

https://api.github.com/repos/apache/nuttx/pulls/13554

PR Body:

"## Summary\r\ni2c: Optimize access to private data\r\n## Impact\r\ni2c_driver\r\n## Testing\r\nLocal iic testing\r\n"

...

Gemini Response:

Rest(GeminiResponse {candidates: [Candidate {content: Content role: Model,parts: [Part {text: Some(

"**No, this PR does not meet the NuttX requirements.**\n\n**Missing Information:**\n\n* **Summary:** \n * Lacks a clear explanation of why the change is necessary. Is it a bug fix, performance improvement, or code cleanup?\n * Doesn't describe the functional part of the code being changed within the i2c driver. \n * Missing details on how the optimization works. \n * No mention of related issues.\n* **Impact:**\n * All impact sections are marked as \"i2c_driver,\" which is too vague.\n * The description should clearly state whether the impact is \"NO\" or \"YES\" and provide specific details if applicable. For example, does the change affect any specific architectures, boards, or drivers?\n* **Testing:**\n * Lacks details about the local setup (host OS, target architecture, board configuration).\n * \"Local iic testing\" is insufficient. Provide specific test cases and commands used.\n * No testing logs provided. \n\n**To meet the NuttX requirements, the PR needs to provide comprehensive information in each section. ** \n"),

...

Response Text:

"**No, this PR does not meet the NuttX requirements.**\n\n**Missing Information:**\n\n* **Summary:** \n * Lacks a clear explanation of why the change is necessary. Is it a bug fix, performance improvement, or code cleanup?\n * Doesn't describe the functional part of the code being changed within the i2c driver. \n * Missing details on how the optimization works. \n * No mention of related issues.\n* **Impact:**\n * All impact sections are marked as \"i2c_driver,\" which is too vague.\n * The description should clearly state whether the impact is \"NO\" or \"YES\" and provide specific details if applicable. For example, does the change affect any specific architectures, boards, or drivers?\n* **Testing:**\n * Lacks details about the local setup (host OS, target architecture, board configuration).\n * \"Local iic testing\" is insufficient. Provide specific test cases and commands used.\n * No testing logs provided. \n\n**To meet the NuttX requirements, the PR needs to provide comprehensive information in each section. ** \n"

...

PR Comment:

Comment {body: Some(

"[**\\[Experimental Bot, please feedback here\\]**](https://github.com/search?q=repo%3Aapache%2Fnuttx+13552&type=issues)\n\n\n\n**No, this PR does not meet the NuttX requirements.**\n\n**Missing Information:**\n\n* **Summary:** \n * Lacks a clear explanation of why the change is necessary. Is it a bug fix, performance improvement, or code cleanup?\n * Doesn't describe the functional part of the code being changed within the i2c driver. \n * Missing details on how the optimization works. \n * No mention of related issues.\n* **Impact:**\n * All impact sections are marked as \"i2c_driver,\" which is too vague.\n * The description should clearly state whether the impact is \"NO\" or \"YES\" and provide specific details if applicable. For example, does the change affect any specific architectures, boards, or drivers?\n* **Testing:**\n * Lacks details about the local setup (host OS, target architecture, board configuration).\n * \"Local iic testing\" is insufficient. Provide specific test cases and commands used.\n * No testing logs provided. \n\n**To meet the NuttX requirements, the PR needs to provide comprehensive information in each section. ** \n",),

...

https://api.github.com/repos/apache/nuttx/pulls/13551

Skipping PR with comments: 13551

Something sounds super feeeshy about this AI Project? Very Hangyodon…

That’s because it was never meant to be an AI Project!

A bunch of us discussed the New Template for NuttX Pull Requests

But the New Template might be too onerous to enforce

Then by accident we thought: Why don’t we let the machine enforce it? (Thus the LLM and AI)

Or maybe we hallucinated the AI after a 50 km overnight hike

How effective is our Bot in enforcing the PR Requirements?

We’re still monitoring? Our Bot seems helpful for newer NuttX Contributors, we noticed better quality in some Pull Requests.

Then again, our Bot might be too intimidating for some folks.

Is LLM the right tech for the job?

LLM has limitations…

We see inconsistency in the outputs. Yet we can’t force the output.

Sometimes the LLM will helpfully suggest “Example Improvements” for easier copy-n-paste. Most of the time: It doesn’t.

The LLM behaves as Multiple Personas? Some extremely chatty and naggy, possibly fierce.

Then again: The LLM is Free. And it doesn’t depend on GitHub Runners.

What if Google starts charging for their LLM?

We’ll scrounge around and switch to another Free LLM. Yeah life gets tough for Open Source Maintainers sigh.

And that’s how we created our PR Review Bot in One Week…

We call GitHub API to fetch NuttX Pull Requests

Append the PR Body to the NuttX PR Requirements

Which becomes the LLM Prompt that we send to Gemini API

Our Bot posts the Gemini Response as a PR Review Comment

Due to quirks in Gemini API: We use Emoji Reactions to limit the API Calls

Though our LLM Bot was Created by Accident

(It wasn’t meant to be an AI Project!)

Many Thanks to my GitHub Sponsors (and the awesome NuttX Community) for supporting my work! This article wouldn’t have been possible without your support.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…